Machine learning systems are known to give back what you put into them. If there is a bias anywhere in the data, the system will have one as well. This is why Google wants to prevent such situations with what it terms “Equality of Opportunity.”

Machine learning systems are mainly used for prediction: they learn the characteristics of the data they are given so that, when a new item comes in, they can assign it to one of several buckets. An image recognition system, for example, will concentrate on learning the differences between vehicles and then assign labels such as “pickup,” “bus,” “sedan,” and others.
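To make the idea of "buckets" concrete, here is a minimal sketch of a nearest-centroid classifier. It assumes each vehicle is described by two made-up measurements (length and height in meters); the training values and the `classify` helper are hypothetical and chosen only for illustration. Real image recognition systems learn from pixels with far more complex models.

```python
import math

# Hypothetical training data: (length_m, height_m) examples per label.
training = {
    "sedan": [(4.6, 1.4), (4.8, 1.5)],
    "pickup": [(5.6, 1.9), (5.8, 1.9)],
    "bus": [(12.0, 3.2), (11.0, 3.0)],
}


def centroid(points):
    """Average each coordinate over a list of equally sized tuples."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))


# "Learning" here is just remembering the average example of each bucket.
centroids = {label: centroid(points) for label, points in training.items()}


def classify(vehicle):
    """Assign a vehicle to the bucket whose centroid is closest."""
    return min(centroids, key=lambda label: math.dist(vehicle, centroids[label]))


print(classify((4.7, 1.45)))  # a typical sedan
print(classify((11.5, 3.1)))  # a typical bus
print(classify((5.1, 1.4)))   # an in-between vehicle still gets forced into a bucket
```

Note that the classifier always returns one of the known labels, even for a vehicle that does not resemble any of the training examples well.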

Mistakes are unavoidable. Take the case of an El Camino or a BRAT. Whatever conclusion the computer arrives at will be wrong, because it does not have enough data on that kind of vehicle. The result of such a mistake is trivial. But what about a situation where the computer is sorting not cars but people, placing them in categories such as likely defaulters on a home loan? People who fall outside the common parameters will ultimately fall foul of the system. This is simply how the machine learning process works.

Moritz Hardt of Google Brain said in a post that when group membership is linked with a sensitive attribute such as gender, race, religion, or disability, the outcome can be prejudicial. Hardt noted that machine learning has not been given a methodology for handling such sensitive attributes.

Hardt, Nathan Srebro, and Eric Price wrote a paper on how such outcomes can be avoided.

The logic behind it is this: when there is a desired outcome, and an attribute has the potential to affect whether someone attains it, the algorithm adjusts itself so that the proportion of people attaining that outcome is similar regardless of the attribute. This way, the computer learns better.
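One way to picture this adjustment is with per-group decision thresholds on a score. The sketch below is not the authors' algorithm; it is a simplified illustration that assumes a model already outputs a score per person, that we know who truly qualified (label 1), and that a separate cutoff may be chosen for each group. The function names and the toy data are hypothetical.

```python
import math
from collections import defaultdict


def true_positive_rates(scores, labels, groups, thresholds):
    """Per group: share of truly qualified people (label 1) who are accepted."""
    accepted = defaultdict(int)
    qualified = defaultdict(int)
    for score, label, group in zip(scores, labels, groups):
        if label == 1:
            qualified[group] += 1
            if score >= thresholds[group]:
                accepted[group] += 1
    return {g: accepted[g] / qualified[g] for g in qualified}


def equalize_thresholds(scores, labels, groups, target_rate):
    """Per group: highest cutoff that accepts at least target_rate of the qualified."""
    thresholds = {}
    for g in sorted(set(groups)):
        qualified_scores = sorted(
            (s for s, y, grp in zip(scores, labels, groups) if grp == g and y == 1),
            reverse=True,
        )
        if not qualified_scores:
            continue  # no qualified members: nothing to equalize for this group
        needed = max(1, math.ceil(target_rate * len(qualified_scores)))
        thresholds[g] = qualified_scores[needed - 1]
    return thresholds


# Toy data (made up): group "b" receives systematically lower scores.
scores = [0.9, 0.8, 0.7, 0.6, 0.3, 0.7, 0.5, 0.4, 0.3, 0.2]
labels = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
groups = ["a"] * 5 + ["b"] * 5

single = {"a": 0.6, "b": 0.6}
print(true_positive_rates(scores, labels, groups, single))    # {'a': 1.0, 'b': 0.25}

adjusted = equalize_thresholds(scores, labels, groups, 0.75)
print(adjusted)                                               # {'a': 0.7, 'b': 0.4}
print(true_positive_rates(scores, labels, groups, adjusted))  # {'a': 0.75, 'b': 0.75}
```

With a single cutoff, every qualified member of group "a" is accepted but only a quarter of group "b". With per-group cutoffs, the same proportion of qualified people is accepted in both groups.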

The goal is to create a model that makes more accurate predictions, not to goose the numbers so that they look politically correct. If, for instance, one of the attributes is not important, such as someone’s religion, you simply include it.

This is indeed a thoughtful thing for Google to be looking into, given that machine learning is taking over industries at the moment. It is important to know the risks and limitations of new technologies, such as the subtle one we have looked at here.

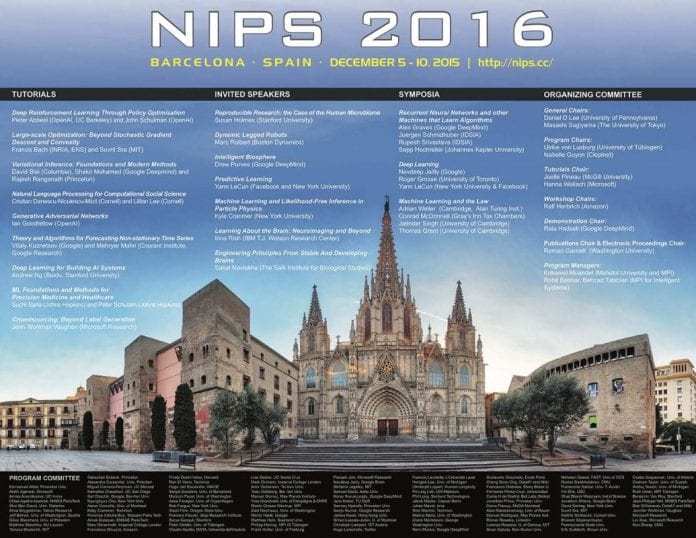

The authors’ work will be presented at the Neural Information Processing Systems conference in Barcelona.